from 3D Graphics

This chapter discusses a number of mathematical issues that arise when creating 3D graphics on a computer. Of course, we cannot hope to cover the vast subject of computer graphics in any amount of detail in a single chapter. Entire books are written that merely survey the topic. This chapter is to graphics what this entire book is to interactive 3D applications: it presents an extremely brief and high level overview of the subject matter, focusing on topics for which mathematics plays a critical role. Just like the rest of this book, we try to pay special attention to those topics that, from our experience, are glossed over in other sources or are a source of confusion in beginners.

To be a bit more direct: this chapter alone is not enough to teach you how to get some pretty pictures on the screen. However, it should be used parallel with (or preceding!) some other course, book, or self-study on graphics, and we hope that it will help you breeze past a few traditional sticky points. Although we present some example snippets in High Level Shading Language (HLSL) at the end of this chapter, you will not find much else to help you figure out which DirectX or OpenGL function calls to make to achieve some desired effect. These issues are certainly of supreme practical importance, but alas, they are also in the category of knowledge that Robert Maynard Hutchins dubbed “rapidly aging facts,” and we have tried to avoid writing a book that requires an update every other year when ATI releases a new card or Microsoft a new version of DirectX. Luckily, up-to-date API references and examples abound on the Internet, which is a much more appropriate place to get that sort of thing. (API stands for application programming interface. In this chapter, API will mean the software that we use to communicate with the rendering subsystem.)

One final caveat is that since this is a book on math for video games, we will have a real-time bias. This is not to say that the book cannot be used if you are interested in learning how to write a raytracer; only that our expertise and focus is in real-time graphics.

This chapter proceeds roughly in order from ivory tower theory to down-and-dirty code snippets.

- Section 10.1 gives a very high-level (and high-brow) theoretical approach to graphics, culminating in the rendering equation.

-

We then lower our brows somewhat to focus attention on matters of more direct practical

application, while still maintaining our platform independence and attempt to be relevant ten

years from now.

- Section 10.2 discusses some basic mathematics related to viewing in 3D.

- Section 10.3 introduces some important coordinate spaces and transformations.

- Section 10.4 looks at how to represent the surfaces of the geometry in our scene using a polygon mesh.

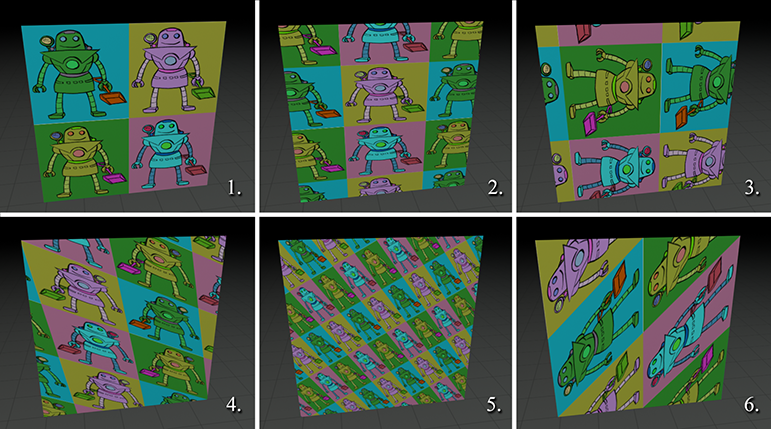

- Section 10.5 shows how to control material properties (such as the “color” of the object) using texture maps.

-

The next sections are about lighting.

- Section 10.6 defines the ubiquitous Blinn-Phong lighting model.

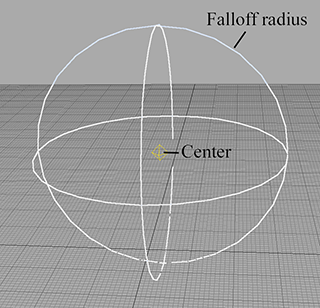

- Section 10.7 discusses some common methods for representing light sources.

-

With a little nudge further away from timeless theory, the next sections discuss

two issues of particular contemporary interest.

- Section 10.8 is about skeletal animation.

- Section 10.9 tells how bump mapping works.

-

The last third of this chapter is the most in danger of becoming irrelevant in

coming years, because it is the most immediately practical.

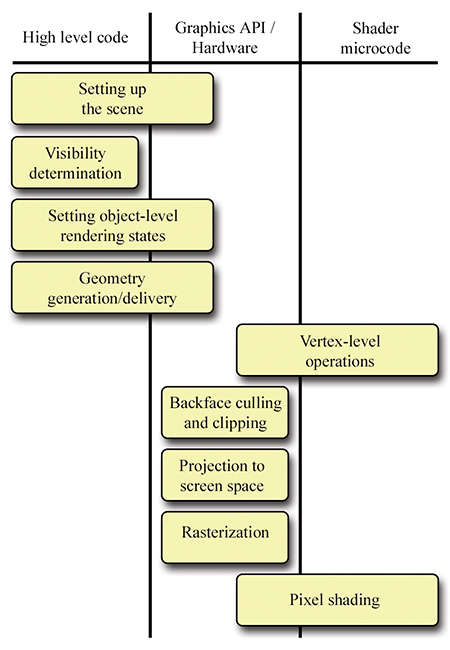

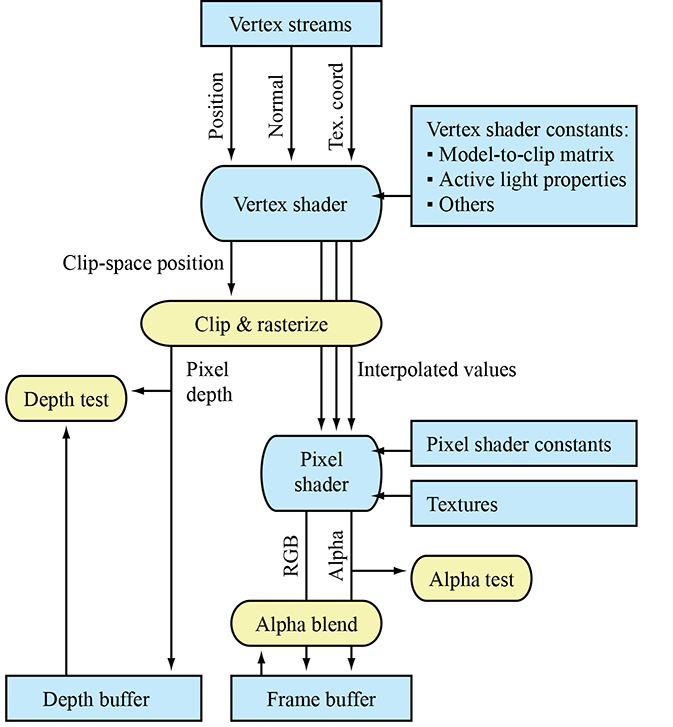

- Section 10.10 gives an overview of a simple real-time graphics pipeline, and then descends that pipeline and talks about some mathematical issues along the way.

- Section 10.11 concludes the chapter squarely in the “rapidly aging facts” territory with several HLSL examples demonstrating some of the techniques covered earlier.

10.1How Graphics Works

We begin our discussion of graphics by telling you how things really work, or perhaps more accurately, how they really should work, if we had enough knowledge and processing power to make things work the right way. The beginner student is to be warned that much introductory material (especially tutorials on the Internet) and API documentation suffers from a great lack of perspective. You might get the impression from reading these sources that diffuse maps, Blinn-Phong shading, and ambient occlusion are “The way images in the real world work,” when in fact you are probably reading a description of how one particular lighting model was implemented in one particular language on one particular piece of hardware through one particular API. Ultimately, any down-to-the-details tutorial must choose a lighting model, language, platform, color representation, performance goals, etc.—as we will have to do later in this chapter. (This lack of perspective is usually purposeful and warranted.) However, we think it's important to know which are the fundamental and timeless principles, and which are arbitrary choices based on approximations and trade-offs, guided by technological limitations that might by applicable only to real-time rendering, or are likely to change in the near future. So before we get too far into the details of the particular type of rendering most useful for introductory real-time graphics, we want to take our stab at describing how rendering really works.

We also hasten to add that this discussion assumes that the goal is photorealism, simulating how things work in nature. In fact, this is often not the goal, and it certainly is never the only goal. Understanding how nature works is a very important starting place, but artistic and practical factors often dictate a different strategy than just simulating nature.

10.1.1The Two Major Approaches to Rendering

We begin with the end in mind. The end goal of rendering is a bitmap, or perhaps a sequence of bitmaps if we are producing an animation. You almost certainly already know that a bitmap is a rectangular array of colors, and each grid entry is known as pixel, which is short for “picture element.” At the time we are producing the image, this bitmap is also known as the frame buffer, and often there is additional post-processing or conversion that happens when we copy the frame buffer to the final bitmap output.

How do we determine the color of each pixel? That is the fundamental question of rendering. Like so many challenges in computer science, a great place to start is by investigating how nature works.

We see light. The image that we perceive is the result of light that bounces around the environment and finally enters the eye. This process is complicated, to say the least. Not only is the physics1 of the light bouncing around very complicated, but so are the physiology of the sensing equipment in our eyes2 and the interpreting mechanisms in our minds. Thus, ignoring a great number of details and variations (as any introductory book must do), the basic question that any rendering system must answer for each pixel is “What color of light is approaching the camera from the direction corresponding to this pixel?”

There are basically two cases to consider. Either we are looking directly at a light source and light traveled directly from the light source to our eye, or (more commonly) light departed from a light source in some other direction, bounced one or more times, and then entered our eye. We can decompose the key question asked previously into two tasks. This book calls these two tasks the rendering algorithm, although these two highly abstracted procedures obviously conceal a great deal of complexity about the actual algorithms used in practice to implement it.

The rendering algorithm- Visible surface determination. Find the surface that is closest to the eye, in the direction corresponding to the current pixel.

- Lighting. Determine what light is emitted and/or reflected off this surface in the direction of the eye.

At this point it appears that we have made some gross simplifications, and many of you no doubt are raising your metaphorical hands to ask “What about translucency?” “What about reflections?” “What about refraction?” “What about atmospheric effects?” Please hold all questions until the end of the presentation.

The first step in the rendering algorithm is known as visible surface determination. There are two common solutions to this problem. The first is known as raytracing. Rather than following light rays in the direction that they travel from the emissive surfaces, we trace the rays backward, so that we can deal only with the light rays that matter: the ones that enter our eye from the given direction. We send a ray out from the eye in the direction through the center of each pixel3 to see the first object in the scene this ray strikes. Then we compute the color that is being emitted or reflected from that surface back in the direction of the ray. A highly simplified summary of this algorithm is illustrated by Listing 10.1.

for (each x,y screen pixel) {

// Select a ray for this pixel

Ray ray = getRayForPixel(x,y);

// Intersect the ray against the geometry. This will

// not just return the point of intersection, but also

// a surface normal and some other information needed

// to shade the point, such as an object reference,

// material information, local S,T coordinates, etc.

// Don't take this pseudocode too literally.

Vector3 pos, normal;

Object *obj; Material *mtl;

if (rayIntersectScene(ray, pos, normal, obj, mtl)) {

// Shade the intersection point. (What light is

// emitted/reflected from this point towards the camera?)

Color c = shadePoint(ray, pos, normal, obj, mtl);

// Put it into the frame buffer

writeFrameBuffer(x,y, c);

} else {

// Ray missed the entire scene. Just use a generic

// background color at this pixel

writeFrameBuffer(x,y, backgroundColor);

}

}

The other major strategy for visible surface determination, the one used for real-time rendering at the time of this writing, is known as depth buffering. The basic plan is that at each pixel we store not only a color value, but also a depth value. This depth buffer value records the distance from the eye to the surface that is reflecting or emitting the light used to determine the color for that pixel. As illustrated in Listing 10.1, the “outer loop” of a raytracer is the screen-space pixels, but in real-time graphics, the “outer loop” is the geometric elements that make up the surface of the scene.

The different methods for describing surfaces are not important here. What is important is that we can project the surface onto screen-space and map them to screen-space pixels through a process known as rasterization. For each pixel of the surface, known as the source fragment, we compute the depth of the surface at that pixel and compare it to the existing value in the depth buffer, sometimes known as the destination fragment. If the source fragment we are currently rendering is farther away from the camera than the existing value in the buffer, then whatever we rendered before this is obscuring the surface we are now rendering (at least at this one pixel), and we move on to the next pixel. However, if our depth value is closer than the existing value in the depth buffer, then we know this is the closest surface to the eye (at least of those rendered so far) and so we update the depth buffer with this new, closer depth value. At this point we might also proceed to step 2 of the rendering algorithm (at least for this pixel) and update the frame buffer with the color of the light being emitted or reflected from the surface that point. This is known as forward rendering, and the basic idea is illustrated by Listing 10.2.

// Clear the frame and depth buffers

fillFrameBuffer(backgroundColor);

fillDepthBuffer(infinity);

// Outer loop iterates over all the primitives (usually triangles)

for (each geometric primitive) {

// Rasterize the primitive

for (each pixel x,y in the projection of the primitive) {

// Test the depth buffer, to see if a closer pixel has

// already been written.

float primDepth = getDepthOfPrimitiveAtPixel(x,y);

if (primDepth > readDepthBuffer(x,y)) {

// Pixel of this primitive is obscured, discard it

continue;

}

// Determine primitive color at this pixel.

Color c = getColorOfPrimitiveAtPixel(x,y);

// Update the color and depth buffers

writeFrameBuffer(x,y, c);

writeDepthBuffer(x,y, primDepth);

}

}

Opposed to forward rendering is deferred rendering, an old technique that is becoming popular again due to the current location of bottlenecks in the types of images we are producing and the hardware we are using to produce them. A deferred renderer uses, in addition to the frame buffer and the depth buffer, additional buffers, collectively known as the G-buffer (short for “geometry” buffer), which holds extra information about the surface closest to the eye at that location, such as the 3D location of the surface, the surface normal, and material properties needed for lighting calculations, such as the “color” of the object and how “shiny” it is at that particular location. (Later, we see how those intuitive terms in quotes are a bit too vague for rendering purposes.) Compared to a forward renderer, a deferred renderer follows our two-step rendering algorithm a bit more literally. First we “render” the scene into the G-buffer, essentially performing only visibility determination—fetching the material properties of the point that is “seen” by each pixel but not yet performing lighting calculations. The second pass actually performs the lighting calculations. Listing 10.3 explains deferred rendering in pseudocode.

// Clear the geometry and depth buffers

clearGeometryBuffer();

fillDepthBuffer(infinity);

// Rasterize all primitives into the G-buffer

for (each geometric primitive) {

for (each pixel x,y in the projection of the primitive) {

// Test the depth buffer, to see if a closer pixel has

// already been written.

float primDepth = getDepthOfPrimitiveAtPixel(x,y);

if (primDepth > readDepthBuffer(x,y)) {

// Pixel of this primitive is obscured, discard it

continue;

}

// Fetch information needed for shading in the next pass.

MaterialInfo mtlInfo;

Vector3 pos, normal;

getPrimitiveShadingInfo(mtlInfo, pos, normal);

// Save it off into the G-buffer and depth buffer

writeGeometryBuffer(x,y, mtlInfo, pos, normal);

writeDepthBuffer(x,y, primDepth);

}

}

// Now perform shading in a 2nd pass, in screen space

for (each x,y screen pixel) {

if (readDepthBuffer(x,y) == infinity) {

// No geometry here. Just write a background color

writeFrameBuffer(x,y, backgroundColor);

} else {

// Fetch shading info back from the geometry buffer

MaterialInfo mtlInfo;

Vector3 pos, normal;

readGeometryBuffer(x,y, mtlInfo, pos, normal);

// Shade the point

Color c = shadePoint(pos, normal, mtlInfo);

// Put it into the frame buffer

writeFrameBuffer(x,y, c);

}

}

Pseudocode for deferred rendering using the depth buffer

Before moving on, we must mention one important point about why deferred rendering is popular. When multiple light sources illuminate the same surface point, hardware limitations or performance factors may prevent us from computing the final color of a pixel in a single calculation, as was shown in the pseudocode listings for both forward and deferred rendering. Instead, we must using multiple passes, one pass for each light, and accumulate the reflected light from each light source into the frame buffer. In forward rendering, these extra passes involve rerendering the primitives. Under deferred rendering, however, extra passes are in image space, and thus depend on the 2D size of the light in screen space, not on the complexity of the scene! It is in this situation that deferred rendering really begins to have large performance advantages over forward rendering.

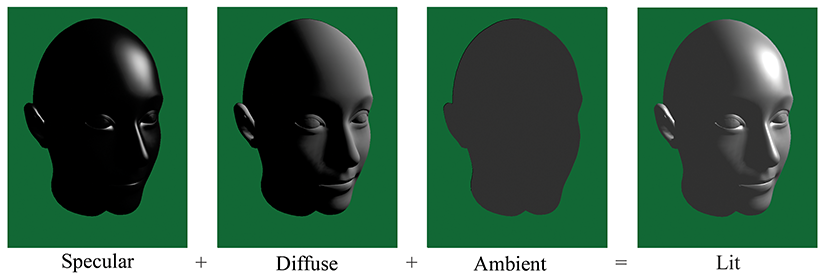

10.1.2Describing Surface Properties: The BRDF

Now let's talk about the second step in the rendering algorithm: lighting. Once we have located the surface closest to the eye, we must determine the amount of light emitted directly from that surface, or emitted from some other source and reflected off the surface in the direction of the eye. The light directly transmitted from a surface to the eye—for example, when looking directly at a light bulb or the sun—is the simplest case. These emissive surfaces are a small minority in most scenes; most surfaces do not emit their own light, but rather they only reflect light that was emitted from somewhere else. We will focus the bulk of our attention on the nonemissive surfaces.

Although we often speak informally about the “color” of an object, we know that the perceived color of an object is actually the light that is entering our eye, and thus can depend on many different factors. Important questions to ask are: What colors of light are incident on the surface, and from what directions? From which direction are we viewing the surface? How “shiny” is the object?4 So a description of a surface suitable for use in rendering doesn't answer the question “What color is this surface?” This question is sometimes meaningless—what color is a mirror, for example? Instead, the salient question is a bit more complicated, and it goes something like, “When light of a given color strikes the surface from a given incident direction, how much of that light is reflected in some other particular direction?” The answer to this question is given by the bidirectional reflectance distribution function, or BRDF for short. So rather than “What color is the object?” we ask, “What is the distribution of reflected light?”

Symbolically, we write the BRDF as the function

Although we are particularly interested in the incident directions that come from emissive surfaces and the outgoing directions that point towards our eye, in general, the entire distribution is relevant. First of all, lights, eyes, and surfaces can move around, so in the context of creating a surface description (for example, “red leather”), we don't know which directions will be important. But even in a particular scene with all the surfaces, lights, and eyes fixed, light can bounce around multiple times, so we need to measure light reflections for arbitrary pairs of directions.

Before moving on, it's highly instructive to see how the two intuitive material properties that

were earlier disparaged, color and shininess, can be expressed precisely in the framework of a

BRDF. Consider a green ball. A green object is green and not blue because it reflects incident

light that is green more strongly than incident light of any other color.6 For example, perhaps green

light is almost all reflected, with only a small fraction absorbed, while 95%of the blue and

red light is absorbed and only 5%of light at those wavelengths is reflected in various

directions. White light actually consists of all the different colors of light, so a green object

essentially filters out colors other than green. If a different object responded to green and red

light in the same manner as our green ball, but absorbed 50%of the blue light and reflected the

other 50%, we might perceive the object as teal. Or if most of the light at all wavelengths was

absorbed, except for a small amount of green light, then we would perceive it as a dark shade of

green. To summarize, a BRDF accounts for the difference in color between two objects through the

dependence on

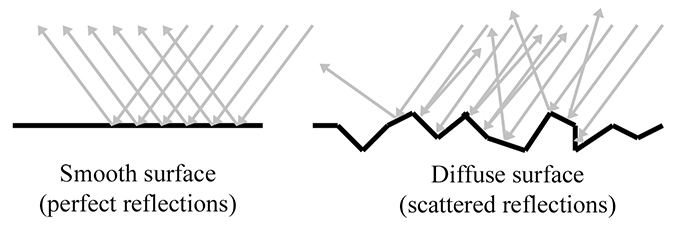

Next, consider the difference between shiny red plastic and diffuse red construction paper. A

shiny surface reflects incident light much more strongly in one particular direction compared to

others, whereas a diffuse surface scatters light more evenly across all outgoing directions. A

perfect reflector, such as a mirror, would reflect all the light from one incoming direction in a

single outgoing direction, whereas a perfectly diffuse surface would reflect light equally in all

outgoing directions, regardless of the direction of incidence. In summary, a BRDF accounts for

the difference in “shininess” of two objects through its dependence on

More complicated phenomena can be expressed by generalizing the BRDF. Translucence and light

refraction can be easily incorporated by allowing the direction vectors to point back into the

surface. We might call this mathematical generalization a bidirectional surface scattering

distribution function (BSSDF). Sometimes light strikes an object, bounces around inside of it,

and then exits at a different point. This phenomenon is known as

subsurface scattering and

is an important aspect of the appearances of many common substances, such as skin and milk. This

requires splitting the single reflection point

By the way, there are certain criteria that a BRDF must satisfy in order to be physically plausible. First, it doesn't make sense for a negative amount of light to be reflected in any direction. Second, it's not possible for the total reflected light to be more than the light that was incident, although the surface may absorb some energy so the reflected light can be less than the incident light. This rule is usually called the normalization constraint. A final, less obvious principle obeyed by physical surfaces is Helmholtz reciprocity: if we pick two arbitrary directions, the same fraction of light should be reflected, no matter which is the incident direction and which is the outgoing direction. In other words,

Helmholtz reciprocityDue to Helmholtz reciprocity, some authors don't label the two directions in the BRDF as “in” and “out” because to be physically plausible the computation must be symmetric.

The BRDF contains the complete description of an object's appearance at a given point, since it describes how the surface will reflect light at that point. Clearly, a great deal of thought must be put into the design of this function. Numerous lighting models have been proposed over the last several decades, and what is surprising is that one of the earliest models, Blinn-Phong, is still in widespread use in real-time graphics today. Although it is not physically accurate (nor plausible: it violates the normalization constraint), we study it because it is a good educational stepping stone and an important bit of graphics history. Actually, describing Blinn-Phong as “history” is wishful thinking—perhaps the most important reason to study this model is that it still is in such widespread use! In fact, it's the best example of the phenomena we mentioned at the start of this chapter: particular methods being presented as if they are “the way graphics work.”

Different lighting models have different goals. Some are better at simulating rough surfaces, others at surfaces with multiple strata. Some focus on providing intuitive “dials” for artists to control, without concern for whether those dials have any physical significance at all. Others are based on taking real-world surfaces and measuring them with special cameras called goniophotometers, essentially sampling the BRDF and then using interpolation to reconstruct the function from the tabulated data. The notable Blinn-Phong model discussed in Section 10.6 is useful because it is simple, inexpensive, and well understood by artists. Consult the sources in the suggested reading for a survey of lighting models.

10.1.3A Very Brief Introduction to Colorimetry

and Radiometry

Graphics is all about measuring light, and you should be aware of some important subtleties, even though we won't have time to go into complete detail here. The first is how to measure the color of light, and the second is how to measure its brightness.

In your middle school science classes you might have learned that every color of light is some mixture of red, green, and blue (RGB) light. This is the popular conception of light, but it's not quite correct. Light can take on any single frequency in the visible band, or it might be a combination of any number of frequencies. Color is a phenomena of human perception and is not quite the same thing as frequency. Indeed different combinations of frequencies of light can be perceived as the same color—these are known as metamers. The infinite combinations of frequencies of light are sort of like all the different chords that can be played on a piano (and also tones between the keys). In this metaphor our color perception is unable to pick out all the different individual notes, but instead, any given chord sounds to us like some combination of middle C, F, and G. Three color channels is not a magic number as far as physics is concerned, it's peculiar to human vision. Most other mammals have only two different types of receptors (we would call them “color blind”), and fish, reptiles, and birds have four types of color receptors (they would call us color blind).

However, even very advanced rendering systems project the continuous spectrum of visible light onto some discrete basis, most commonly, the RGB basis. This is a ubiquitous simplification, but we still wanted to let you know that it is a simplification, as it doesn't account for certain phenomena. The RGB basis is not the only color space, nor is it necessarily the best one for many purposes, but it is a very convenient basis because it is the one used by most display devices. In turn, the reason that this basis is used by so many display devices is due to the similarity to our own visual system. Hall [11] does a good job of describing the shortcomings of the RGB system.

Since the visible portion of the electromagnetic spectrum is continuous, an expression such as

- To describe the spectral distribution of light requires a continuous function, not just three numbers. However, to describe the human perception of that light, three numbers are essentially sufficient.

- The RGB system is a convenient color space, but it's not the only one, and not even the best one for many practical purposes. In practice, we usually treat light as being a combination of red, green, and blue because we are making images for human consumption.

You should also be aware of the different ways that we can measure the intensity of light. If we take a viewpoint from physics, we consider light as energy in the form of electromagnetic radiation, and we use units of measurement from the field of radiometry. The most basic quantity is radiant energy, which in the SI system is measured in the standard unit of energy, the joule (J). Just like any other type of energy, we are often interested in the rate of energy flow per unit time, which is known as power. In the SI system power is measured using the watt (W), which is one joule per second (1 W = 1 J/s). Power in the form of electromagnetic radiation is called radiant power or radiant flux. The term “flux,” which comes from the Latin fluxus for “flow,” refers to some quantity flowing across some cross-sectional area. Thus, radiant flux measures the total amount of energy that is arriving, leaving, or flowing across some area per unit time.

Imagine that a certain amount of radiant flux is emitted from a

Several equivalent terms exist for radiosity. First, note that we can measure the flux density (or total flux, for that matter) across any cross-sectional area. We might be measuring the radiant power emitted from some surface with a finite area, or the surface through which the light flows might be an imaginary boundary that exists only mathematically (for example, the surface of some imaginary sphere that surrounds a light source). Although in all cases we are measuring flux density, and thus the term “radiosity” is perfectly valid, we might also use more specific terms, depending on whether the light being measured is coming or going. If the area is a surface and the light is arriving on the surface, then the term irradiance is used. If light is being emitted from a surface, the term radiant exitance or radiant emittance is used. In digital image synthesis, the word “radiosity” is most often used to refer to light that is leaving a surface, having been either reflected or emitted.

When we are talking about the brightness at a particular point, we cannot use plain old radiant power because the area of that point is infinitesimal (essentially zero). We can speak of the flux density at a single point, but to measure flux, we need a finite area over which to measure. For a surface of finite area, if we have a single number that characterizes the total for the entire surface area, it will be measured in flux, but to capture the fact that different locations within that area might be brighter than others, we use a function that varies over the surface that will measure the flux density.

Now we are ready to consider what is perhaps the most central quantity we need to measure in graphics: the intensity of a “ray” of light. We can see why the radiosity is not the unit for the job by an extension of the ideas from the previous paragraph. Imagine a surface point surrounded by an emissive dome and receiving a certain amount of irradiance coming from all directions in the hemisphere centered on the local surface normal. Now imagine a second surface point experiencing the same amount of irradiance, only all of the illumination is coming from a single direction, in a very thin beam. Intuitively, we can see that a ray along this beam is somehow “brighter” than any one ray that is illuminating the first surface point. The irradiance is somehow “denser.” It is denser per unit solid area.

The idea of a solid area is probably new to some readers, but we can easily understand the idea

by comparing it to angles in the plane. A “regular” angle is measured (in radians) based on

the length of its projection onto the unit circle. In the same way, a solid angle measures the

area as projected onto the unit sphere surrounding the point. The SI unit for solid angle

is the

steradian, abbreviated “sr.” The complete sphere has

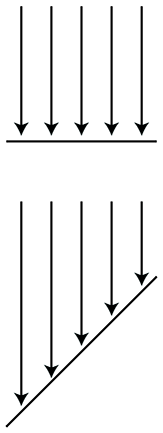

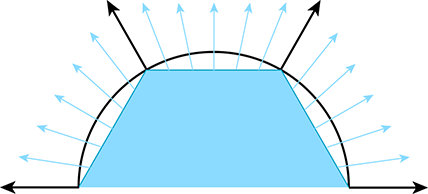

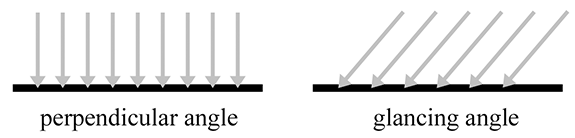

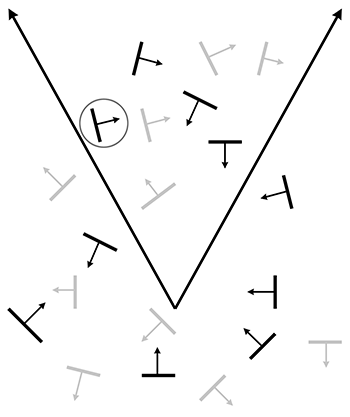

By measuring the radiance per unit solid angle, we can express the intensity of light at a certain point as a function that varies based upon the direction of incidence. We are very close to having the unit of measurement that describes the intensity of a ray. There is just one slight catch, illustrated by Figure 10.1, which is a close-up of a very thin pencil of light rays striking a surface. On the top, the rays strike the surface perpendicularly, and on the bottom, light rays of the same strength strike a different surface at an angle. The key point is that the area of the top surface is smaller than the area of the bottom surface; therefore, the irradiance on the top surface is larger than the irradiance on the bottom surface, despite the fact that the two surfaces are being illuminated by the “same number” of identical light rays. This basic phenomenon, that the angle of the surface causes incident light rays to be spread out and thus contribute less irradiance, is known as Lambert's law. We have more to say about Lambert's law in Section 10.6.3, but for now, the key idea is that the contribution of a bundle of light to the irradiance at a surface depends on the angle of that surface.

Due to Lambert's law, the unit we use in graphics to measure the strength of a ray, radiance, is defined as the radiant flux per unit projected area, per unit solid angle. To measure a projected area, we take the actual surface area and project it onto the plane perpendicular to the ray. (In Figure 10.1, imagine taking the bottom surface and projecting it upwards onto the top surface). Essentially this counteracts Lambert's law.

Table 10.1 summarizes the most important radiometric terms.

| Quantity | Units | SI unit | Rough translation |

| Radiant energy | Energy |

|

Total illumination duringan interval of time |

| Radiant flux | Power | Brightness of a finite areafrom all directions | |

| Radiant flux density | Power per unit area | Brightness of a single pointfrom all directions | |

| Irradiance | Power per unit area | Radiant flux density ofincident light | |

| Radiant exitance | Power per unit area | Radiant flux density ofemitted light | |

| Radiosity | Power per unit area | Radiant flux density ofemitted or reflected light | |

| Radiance | Power per unit projected area, perunit solid angle | Brightness of a ray |

Whereas radiometry takes the perspective of physics by measuring the raw energy of the light, the field of photometry weighs that same light using the human eye. For each of the corresponding radiometric terms, there is a similar term from photometry (Table 10.2). The only real difference is a nonlinear conversion from raw energy to perceived brightness.

| Radiometric term | Photometric term | SI Photometric unit |

| Radiant energy | Luminous energy | talbot, or lumen second ( |

| Radiant flux | Luminous flux, luminous power | lumen ( |

| Irradiance | Illuminance | lux ( |

| Radiant exitance | Luminous emittance | lux ( |

| Radiance | Luminance |

Throughout the remainder of this chapter, we try to use the proper radiometric units when possible. However, the practical realities of graphics make using proper units confusing, for two particular reasons. It is common in graphics to need to take some integral over a “signal”—for example, the color of some surface. In practice we cannot do the integral analytically, and so we must integrate numerically, which boils down to taking a weighted average of many samples. Although mathematically we are taking a weighted average (which ordinarily would not cause the units to change), in fact what we are doing is integrating, and that means each sample is really being multiplied by some differential quantity, such as a differential area or differential solid angle, which causes the physical units to change. A second cause of confusion is that, although many signals have a finite nonzero domain in the real world, they are represented in a computer by signals that are nonzero at a single point. (Mathematically, we say that the signal is a multiple of a Direc delta; see Section 12.4.3.) For example, a real-world light source has a finite area, and we would be interested in the radiance of the light at a given point on the emissive surface, in a given direction. In practice, we imagine shrinking the area of this light down to zero while holding the radiant flux constant. The flux density becomes infinite in theory. Thus, for a real area light we would need a signal to describe the flux density, whereas for a point light, the flux density becomes infinite and we instead describe the brightness of the light by its total flux. We'll repeat this information when we talk about point lights.

- Vague words such as “intensity” and “brightness” are best avoided when the more specific radiometric terms can be used. The scale of our numbers is not that important and we don't need to use real world SI units, but it is helpful to understand what the different radiometric quantities measure to avoid mixing quantities together inappropriately.

- Use radiant flux to measure the total brightness of a finite area, in all directions.

- Use radiant flux density to measure the brightness at a single point, in all directions. Irradiance and radiant exitance refer to radiant flux density of light that is incident and emitted, respectively. Radiosity is the radiant flux density of light that is leaving a surface, whether the light was reflected or emitted.

- Due to Lambert's law, a given ray contributes more differential irradiance when it strikes a surface at a perpendicular angle compared to a glancing angle.

- Use radiance to measure the brightness of a ray. More specifically, radiance is the flux per unit projected angle, per solid angle. We use projected area so that the value for a given ray is a property of a ray alone and does not depend on the orientation of the surface used to measure the flux density.

- Practical realities thwart our best intentions of doing things “the right way” when it comes to using proper units. Numerical integration is a lot like taking a weighted average, which hides the change of units that really occurs. Point lights and other Dirac deltas add further confusion.

10.1.4The Rendering Equation

Now let's fit the BRDF into the rendering algorithm. In step 2 of our rendering algorithm

(Section 10.1), we're trying to determine the radiance leaving a particular

surface in the direction of our eye. The only way this can happen is for light to arrive from

some direction onto the surface and get reflected in our direction. With the BRDF, we now have a

way to measure this. Consider all the potential directions that light might be incident upon the

surface, which form a hemisphere centered on

As fundamental as Equation (10.1) may be, its development is relatively recent, having been published in SIGGRAPH in 1986 by Kajiya [13]. Furthermore, it was the result of, rather than the cause of, numerous strategies for producing realistic images. Graphics researchers pursued the creation of images through different techniques that seemed to make sense to them before having a framework to describe the problem they were trying to solve. And for many years after that, most of us in the video game industry were unaware that the problem we were trying to solve had finally been clearly defined. (Many still are.)

Now let's convert this equation into English and see what the heck it means. First of all,

notice that

The term

On the right-hand side, we have a sum. The first term in the sum

Now let's dig into that intimidating integral. (By the way, if you haven't had calculus and haven't read Chapter 11 yet, just replace the word “integral” with “sum,” and you won't miss any of the main point of this section.) We've actually already discussed how it works when we talked about the BRDF, but let's repeat it with different words. We might rewrite the integral as

Note that symbol

Now all that is left is to dissect the integrand. It's a product of three factors:

The first factor denotes the radiance incident from the direction of

In purely mathematical terms, the rendering equation is an integral equation: it states a

relationship between some unknown function

To render a scene realistically, we must solve the rendering equation, which requires us to know (in theory) not only the radiance arriving at the camera, but also the entire distribution of radiance in the scene in every direction at every point. Clearly, this is too much to ask for a finite, digital computer, since both the set of surface locations and the set of potential incident/exiting directions are infinite. The real art in creating software for digital image synthesis is to allocate the limited processor time and memory most efficiently, to make the best possible approximation.

The simple rendering pipeline we present in Section 10.10 accounts only for direct light. It doesn't account for indirect light that bounced off of one surface and arrived at another. In other words, it only does “one recursion level” in the rendering equation. A huge component of realistic images is accounting for the indirect light—solving the rendering equation more completely. The various methods for accomplishing this are known as global illumination techniques.

This concludes our high-level presentation of how graphics works. Although we admit we have not yet presented a single practical idea, we believe it's very important to understand what you are trying to approximate before you start to approximate it. Even though the compromises we are forced to make for the sake of real-time are quite severe, the available computing power is growing. A video game programmer whose only exposure to graphics has been OpenGL tutorials or demos made by video card manufacturers or books that focused exclusively on real-time rendering will have a much more difficult time understanding even the global illumination techniques of today, much less those of tomorrow.

10.2Viewing in 3D

Before we render a scene, we must pick a camera and a window. That is, we must decide where to render it from (the view position, orientation, and zoom) and where to render it to (the rectangle on the screen). The output window is the simpler of the two, and so we will discuss it first.

Section 10.2.1 describes how to specify the output window. Section 10.2.2 discusses the pixel aspect ratio. Section 10.2.3 introduces the view frustum. Section 10.2.4 describes field of view angles and zoom.

10.2.1Specifying the Output Window

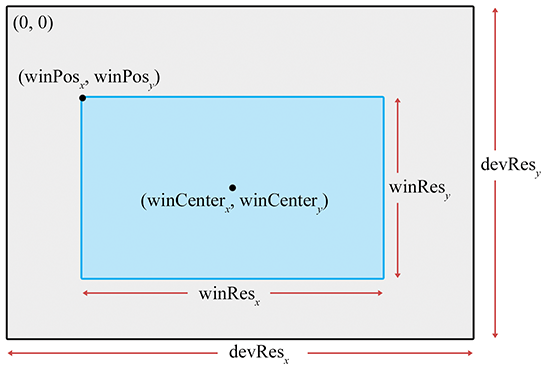

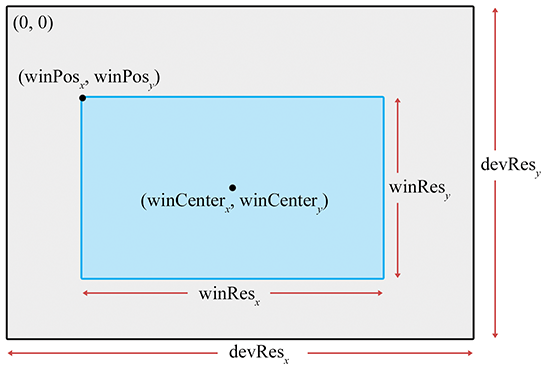

We don't have to render our image to the entire screen. For example, in split-screen multiplayer games, each player is given a portion of the screen. The output window refers to the portion of the output device where our image will be rendered. This is shown in Figure 10.2.

The position of the window is specified by the coordinates of the upper left-hand pixel

With that said, it is important to realize that we do not necessarily have to be rendering to the screen at all. We could be rendering into a buffer to be saved into a .TGA file or as a frame in an .AVI, or we may be rendering into a texture as a subprocess of the “main” render, to produce a shadow map, or a reflection, or the image on a monitor in the virtual world. For these reasons, the term render target is often used to refer to the current destination of rendering output.

10.2.2Pixel Aspect Ratio

Regardless of whether we are rendering to the screen or an off-screen buffer, we must know the aspect ratio of the pixels, which is the ratio of a pixel's height to its width. This ratio is often 1:1—that is, we have “square” pixels—but this is not always the case! We give some examples below, but it is common for this assumption to go unquestioned and become the source of complicated kludges applied in the wrong place, to fix up stretched or squashed images.

The formula for computing the aspect ratio is

Computing the pixel aspect ratio

The notation

But, as mentioned already, we often deal with square pixels with an aspect ratio of 1:1. For

example, on a desktop monitor with a physical width:height ratio of 4:3, some common resolutions

resulting in square pixel ratios are

Notice that nowhere in these calculations is the size or location of the window used; the location and size of the rendering window has no bearing on the physical proportions of a pixel. However, the size of the window will become important when we discuss field of view in Section 10.2.4, and the position is important when we map from camera space to screen space Section 10.3.5.

At this point, some readers may be wondering how this discussion makes sense in the context of

rendering to a bitmap, where the word “physical” implied by the variable names

10.2.3The View Frustum

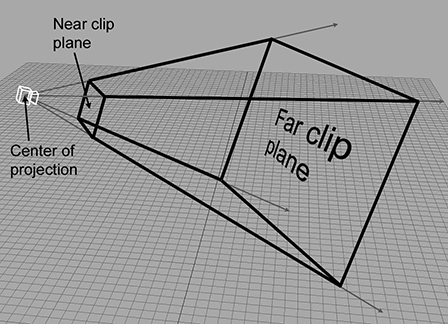

The view frustum is the volume of space that is potentially visible to the camera. It is shaped like a pyramid with the tip snipped off. An example of a view frustum is shown in Figure 10.3.

The view frustum is bounded by six planes, known as the clip planes. The first four of

the planes form the sides of the pyramid and are called the top, left, bottom, and right planes,

for obvious reasons. They correspond to the sides of the output window. The near and far clip

planes, which correspond to certain camera-space values of

The reason for the far clip plane is perhaps easier to understand. It prevents rendering of

objects beyond a certain distance. There are two practical reasons why a far clip plane is

needed. The first is relatively easy to understand: a far clip plane can limit the number of

objects that need to be rendered in an outdoor environment. The second reason is slightly more

complicated, but essentially it has to do with how the depth buffer values are assigned. As an

example, if the

depth buffer entries are 16-bit fixed point, then the largest depth value that can

be stored is 65,535. The far clip establishes what (floating point)

Notice that each of the clipping planes are planes, with emphasis on the fact that they extend infinitely. The view volume is the intersection of the six half-spaces defined by the clip planes.

10.2.4Field of View and Zoom

A camera has position and orientation, just like any other object in the world. However, it also has an additional property known as field of view. Another term you probably know is zoom. Intuitively, you already know what it means to “zoom in” and “zoom out.” When you zoom in, the object you are looking at appears bigger on screen, and when you zoom out, the apparent size of the object is smaller. Let's see if we can develop this intuition into a more precise definition.

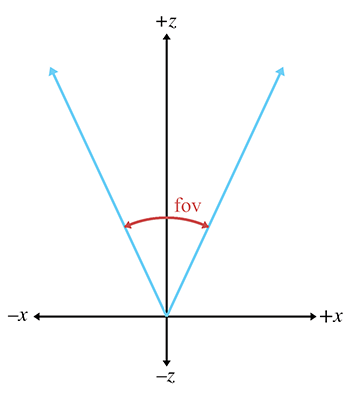

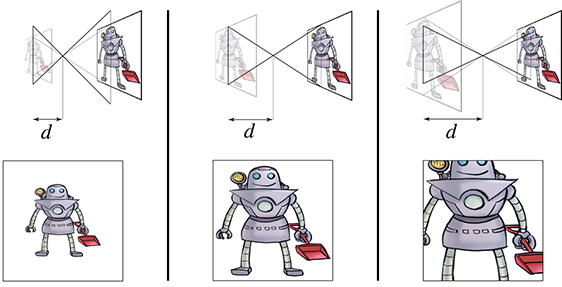

The field of view (FOV) is the angle that is intercepted by the view frustum. We actually need two angles: a horizontal field of view, and a vertical field of view. Let's drop back to 2D briefly and consider just one of these angles. Figure 10.4 shows the view frustum from above, illustrating precisely the angle that the horizontal field of view measures. The labeling of the axes is illustrative of camera space, which is discussed in Section 10.3.

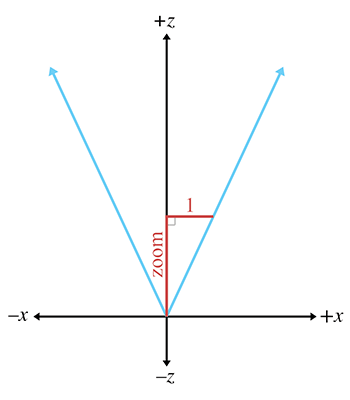

Zoom measures the ratio of the apparent size of the object relative to a

Zoom can be interpreted geometrically as shown in Figure 10.5. Using some basic trig, we can derive the conversion between zoom and field of view:

Converting between zoom and field of viewNotice the inverse relationship between zoom and field of view. As zoom gets larger, the field of view gets smaller, causing the view frustum to narrow. It might not seem intuitive at first, but when the view frustum gets more narrow, the perceived size of visible objects increases.

Field of view is a convenient measurement for humans to use, but as we discover in Section 10.3.4, zoom is the measurement that we need to feed into the graphics pipeline.

We need two different field of view angles (or zoom values), one horizontal and one vertical. We are certainly free to choose any two arbitrary values we fancy, but if we do not maintain a proper relationship between these values, then the rendered image will appear stretched. If you've ever watched a movie intended for the wide screen that was simply squashed anamorphically to fit on a regular TV, or watched content with a 4:3 aspect on a 16:9 TV in “full”8 mode, then you have seen this effect.

In order to maintain proper proportions, the zoom values must be inversely proportional to the physical dimensions of the output window:

The usual relationship between vertical and horizontal zoom

The variable

In this formula,

-

-

-

-

-

-

Many rendering packages allow you to specify only one field of view angle (or zoom value). When you do this, they automatically compute the other value for you, assuming you want uniform display proportions. For example, you may specify the horizontal field of view, and they compute the vertical field of view for you.

Now that we know how to describe zoom in a manner suitable for consumption by a computer, what do we do with these zoom values? They go into the clip matrix, which is described in Section 10.3.4.

10.2.5Orthographic Projection

The discussion so far has centered on perspective projection, which is the most commonly used type of projection, since that's how our eyes perceive the world. However, in many situations orthographic projection is also useful. We introduced orthographic projection in Section 5.3; to briefly review, in orthographic projection, the lines of projection (the lines that connect all the points in space that project onto the same screen coordinates) are parallel, rather than intersecting at a single point. There is no perspective foreshortening in orthographic projection; an object will appear the same size on the screen no matter how far away it is, and moving the camera forward or backward along the viewing direction has no apparent effect so long as the objects remain in front of the near clip plane.

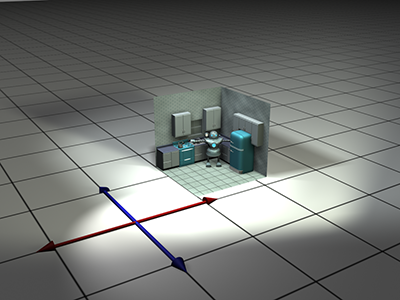

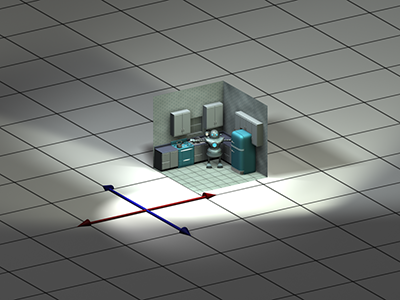

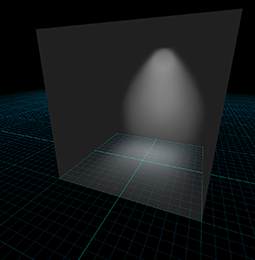

Figure 10.6 shows a scene rendered from the same position and orientation, comparing perspective and orthographic projection. On the left, notice that with perspective projection, parallel lines do not remain parallel, and the closer grid squares are larger than the ones in the distance. Under orthographic projection, the grid squares are all the same size and the grid lines remain parallel.

|

|

| Perspective projection | Orthographic projection |

Orthographic views are very useful for “schematic” views and other situations where distances and angles need to be measured precisely. Every modeling tool will support such a view. In a video game, you might use an orthographic view to render a map or some other HUD element.

For an orthographic projection, it makes no sense to speak of the “field of view” as an angle,

since the view frustum is shaped like a box, not a pyramid. Rather than defining the

The zoom value has a different meaning in orthographic projection compared to perspective. It is related to the physical size of the frustum box:

Converting between zoom and frustum size in orthographic projection

As with perspective projections, there are two different zoom values, one for

10.3Coordinate Spaces

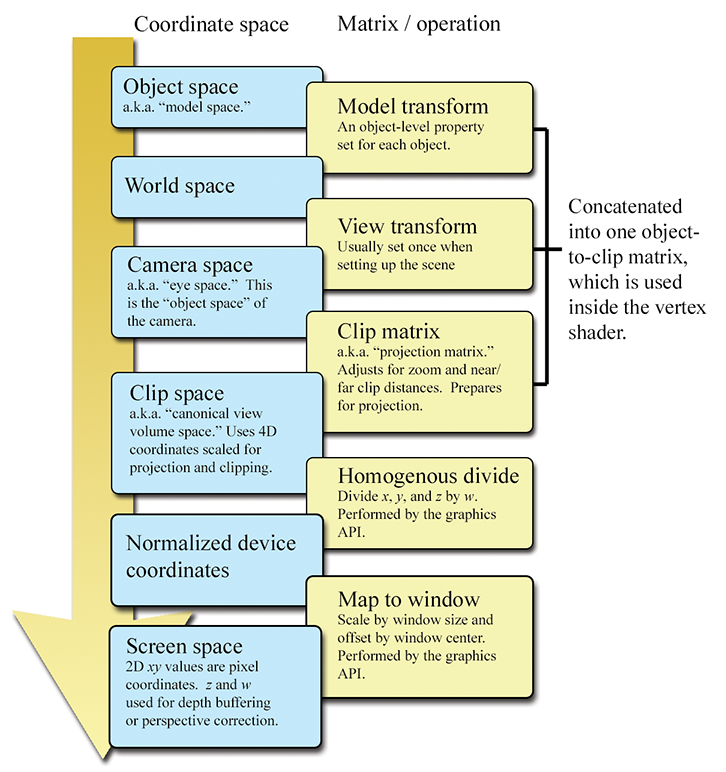

This section reviews several important coordinate spaces related to 3D viewing. Unfortunately, terminology is not consistent in the literature on the subject, even though the concepts are. Here, we discuss the coordinate spaces in the order they are encountered as geometry flows through the graphics pipeline.

10.3.1Model, World, and Camera Space

The geometry of an object is initially described in object space, which is a coordinate space local to the object being described (see Section 3.2.2). The information described usually consists of vertex positions and surface normals. Object space is also known as local space and, especially in the context of graphics, model space.

From model space, the vertices are transformed into world space (see Section 3.2.1). The transformation from modeling space to world space is often called the model transform. Typically, lighting for the scene is specified in world space, although, as we see in Section 10.11, it doesn't really matter what coordinate space is used to perform the lighting calculations provided that the geometry and the lights can be expressed in the same space.

From world space, vertices are transformed by the view transform into camera space (see Section 3.2.3), also known as eye space and view space (not to be confused with canonical view volume space, discussed later). Camera space is a 3D coordinate space in which the origin is at the center of projection, one is axis parallel to the direction the camera is facing (perpendicular to the projection plane), one axis is the intersection of the top and bottom clip planes, and the other axis is the intersection of the left and right clip planes. If we assume the perspective of the camera, then one axis will be “horizontal” and one will be “vertical.”

In a left-handed world, the most common convention is to point

10.3.2Clip Space and the Clip Matrix

From camera space, vertices are transformed once again into clip space, also known as the canonical view volume space. The matrix that transforms vertices from camera space into clip space is called the clip matrix, also known as the projection matrix.

Up until now, our vertex positions have been “pure” 3D vectors—that is, they only had three

coordinates, or if they have a fourth coordinate, then

-

Prepare for projection. We put the proper value into

-

Apply zoom and prepare for clipping. We scale

10.3.3The Clip Matrix: Preparing for Projection

Recall from Section 6.4.1 that a 4D homogeneous vector is mapped

to the corresponding physical 3D vector by dividing by

The first goal of the clip matrix is to get the correct value into

If this was the only purpose of the clip matrix, to place the correct value into

Multiplying a vector of the form

At this point, many readers might very reasonably ask two questions. The first question might be,

“Why is this so complicated? This seems like a lot of work to accomplish what basically amounts

to just dividing by

The second question a reader might have is, “What happened to

To understand why

The vertical line on the left side of each diagram represents the film (or, for modern cameras,

the sensing element), which lies in the infinite plane of projection. Importantly, notice that

the film is the same height in each diagram. As we increase

Now let's look at what happens inside a computer. The “film” inside a computer is the rectangular portion of the projection plane that intersects the view frustum.9 Notice that if we increase the focal distance, the size of the projected image increases, just like it did in a real camera. However, inside a computer, the film actually increases by this same proportion, rather than the view frustum changing in size. Because the projected image and the film increase by the same proportion, there is no change to the rendered image or the apparent size of objects within thisimage.

In summary, zoom is always accomplished by changing the shape of the view frustum, whether we're

talking about a real camera or inside a computer. In a real camera, changing the focal length

changes the shape of the view frustum because the film stays the same size. However, in a

computer, adjusting the focal distance

Some software allow the user to specify the field of view by giving a focal length measured in millimeters. These numbers are in reference to some standard film size, almost always 35 mm film.

What about orthographic projection? In this case, we do not want to divide by

The next section fills in the rest of the clip matrix. But for now, the key point is that a

perspective projection matrix will always have a right-hand column of

Notice that multiplying the entire matrix by a constant factor doesn't have

any effect on the projected values

10.3.4The Clip Matrix: Applying Zoom and

Preparing for Clipping

The second goal of the clip matrix is to scale the

So the points inside the view volume satisfy

Any geometry that does not satisfy these equalities must be clipped to the view frustum. Clipping is discussed in Section 10.10.4.

To stretch things to put the top, left, right, and bottom clip planes in place, we scale the

Let

This clip matrix assumes a coordinate system with

Under these DirectX-style conventions, the points inside the view frustum satisfy the inequality

We can easily tell that the two matrices in

Equations (10.6) and

(10.7) are perspective projection

matrices because the right-hand column is

What about orthographic projection? The first and second columns of the projection matrix don't

change, and we know the fourth column will become

Alternatively, if we are using a DirectX-style range for the clip space

In this book, we prefer a left-handed convention and row vectors on the left, and all the

projection matrices so far assume those conventions. However, both of these choices differ from

the OpenGL convention, and we know that many readers may be working in environments that are

similar to OpenGL. Since this can be very confusing, let's repeat these matrices, but with the

right-handed, column-vector OpenGL conventions. We'll only discuss the

It's instructive to consider how to convert these matrices from one set of conventions to the

other. Because OpenGL uses column vectors, the first thing we need to do is transpose our

matrix. Second, the right-handed conventions have

The above procedure results in the following perspective projection matrix

Clip matrix for perspective projection assuming OpenGL conventionsand the orthographic projection matrix is

Clip matrix for orthographic projection assuming OpenGL conventions

So, for OpenGL conventions, you can tell whether a projection matrix is perspective or

orthographic based on the bottom row. It will be

Now that we know a bit about clip space, we can understand the need for the near clip plane. Obviously, there is a singularity precisely at the origin, where a perspective projection is not defined. (This corresponds to a perspective division by zero.) In practice, this singularity would be extremely rare, and however we wanted to handle it—say, by arbitrarily projecting the point to the center of the screen—would be OK, since putting the camera directly in a polygon isn't often needed in practice.

But projecting polygons onto pixels isn't the only issue. Allowing for arbitrarily small (but

positive) values of

10.3.5Screen Space

Once we have clipped the geometry to the view frustum, it is projected into screen space, which corresponds to actual pixels in the frame buffer. Remember that we are rendering into an output window that does not necessarily occupy the entire display device. However, we usually want our screen-space coordinates to be specified using coordinates that are absolute to the rendering device (Figure 10.9).

Screen space is a 2D space, of course. Thus, we must project the points from clip space to

screen space to generate the correct 2D coordinates. The first thing that happens is the

standard homogeneous division by

A quick comment is warranted about the negation of the

Speaking of

An alternative strategy, known as

The

On modern graphics APIs at the time of this writing, the conversion of vertex coordinates from clip space to screen space is done for you. Your vertex shader outputs coordinates in clip space. The API clips the triangles to the view frustum and then projects the coordinates to screen space. But that doesn't mean that you will never use the equations in this section in your code. Quite often, we need to perform these calculations in software for visibility testing, level-of-detail selection, and so forth.

10.3.6Summary of Coordinate Spaces

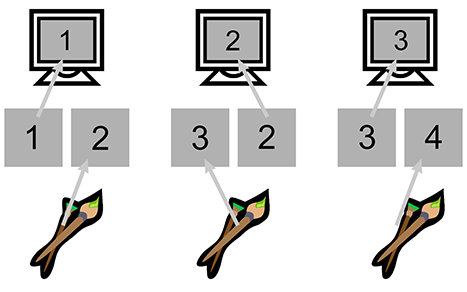

Figure 10.10 summarizes the coordinate spaces and matrices discussed in this section, showing the data flow from object space to screen space.

The coordinate spaces we've mentioned are the most important and common ones, but other coordinate spaces are used in computer graphics. For example, a projected light might have its own space, which is essentially the same as camera space, only it is from the perspective that the light “looks” onto the scene. This space is important when the light projects an image (sometimes called a gobo) and also for shadow mapping to determine whether a light can “see” a given point.

Another space that has become very important is tangent space, which is a local space on the surface of an object. One basis vector is the surface normal and the other two basis vectors are locally tangent to the surface, essentially establishing a 2D coordinate space that is “flat” on the surface at that spot. There are many different ways we could determine these basis vectors, but by far the most common reason to establish such a coordinate space is for bump mapping and related techniques. A more complete discussion of tangent space will need to wait until after we discuss texture mapping in Section 10.5, so we'll come back to this subject in Section 10.9.1. Tangent space is also sometimes called surface-localspace.

10.4Polygon Meshes

To render a scene, we need a mathematical description of the geometry in that scene. Several different methods are available to us. This section focuses on the one most important for real-time rendering: the triangle mesh. But first, let's mention a few alternatives to get some context. Constructive solid geometry (CSG) is a system for describing an object's shape using Boolean operators (union, intersection, subtraction) on primitives. Within video games, CSG can be especially useful for rapid prototyping tools, with the Unreal engine being a notable example. Another technique that works by modeling volumes rather than their surfaces is metaballs, sometimes used to model organic shapes and fluids, as was discussed in Section 9.1. CSG, metaballs, and other volumetric descriptions are very useful in particular realms, but for rendering (especially real-time rendering) we are interested in a description of the surface of the object, and seldom need to determine whether a given point is inside or outside this surface. Indeed, the surface need not be closed or even define a coherent volume.

The most common surface description is the polygon mesh, of which you are probably already aware. In certain circumstances, it's useful to allow the polygons that form the surface of the object to have an arbitrary number of vertices; this is often the case in importing and editing tools. For real-time rendering, however, modern hardware is optimized for triangle meshes, which are polygon meshes in which every polygon is a triangle. Any given polygon mesh can be converted into an equivalent triangle mesh by decomposing each polygon into triangles individually, as was discussed briefly in Section 9.7.3. Please note that many important concepts introduced in the context of a single triangle or polygon were covered in Section 9.6 and Section 9.7, respectively. Here, our focus is on how more than one triangle can be connected in a mesh.

One very straightforward way to store a triangle mesh would be to use an array of triangles, as shown in Listing 10.4.

struct Triangle {

Vector3 vertPos[3]; // vertex positions

};

struct TriangleMesh {

int triCount; // number of triangles

Triangle *triList; // array of triangles

};

For some applications this trivial representation might be adequate. However, the term “mesh” implies a degree of connectivity between adjacent triangles, and this connectivity is not expressed in our trivial representation. There are three basic types of information in a triangle mesh:

- Vertices. Each triangle has exactly three vertices. Each vertex may be shared by multiple triangles. The valence of a vertex refers to how many faces are connected to the vertex.

- Edges. An edge connects two vertices. Each triangle has three edges. In many cases, each edge is shared by exactly two faces, but there are certainly exceptions. If the object is not closed, an open edge with only one neighboring face can exist.

- Faces. These are the surfaces of the triangles. We can store a face as either a list of three vertices, or a list of three edges.

A variety of methods exist to represent this information efficiently, depending on the operations to be performed most often on the mesh. Here we will focus on a standard storage format known as an indexed triangle mesh.

10.4.1Indexed Triangle Mesh

An indexed triangle mesh consists of two lists: a list of vertices, and a list of triangles.

- Each vertex contains a position in 3D. We may also store other information at the vertex level, such as texture-mapping coordinates, surface normals, or lighting values.

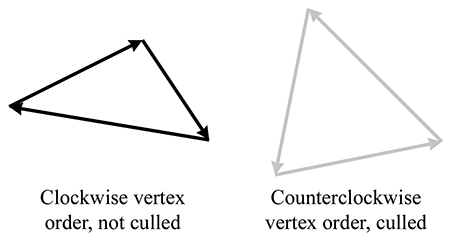

- A triangle is represented by three integers that index into the vertex list. Usually, the order in which these vertices are listed is significant, since we may consider faces to have “front” and “back” sides. We adopt the left-handed convention that the vertices are listed in clockwise order when viewed from the front side. Other information may also be stored at the triangle level, such as a precomputed normal of the plane containing the triangle, surface properties (such as a texture map), and so forth.

Listing 10.5 shows a highly simplified example of how an indexed triangle mesh might be stored in C.

// struct Vertex is the information we store at the vertex level

struct Vertex {

// 3D position of the vertex

Vector3 pos;

// Other information could include

// texture mapping coordinates, a

// surface normal, lighting values, etc.

};

// struct Triangle is the information we store at the triangle level

struct Triangle {

// Indices into the vertex list. In practice, 16-bit indices are

// almost always used rather than 32-bit, to save memory and bandwidth.

int vertexIndex[3];

// Other information could include

// a normal, material information, etc

};

// struct TriangleMesh stores an indexed triangle mesh

struct TriangleMesh {

// The vertices

int vertexCount;

Vertex *vertexList;

// The triangles

int triangleCount;

Triangle *triangleList;

};

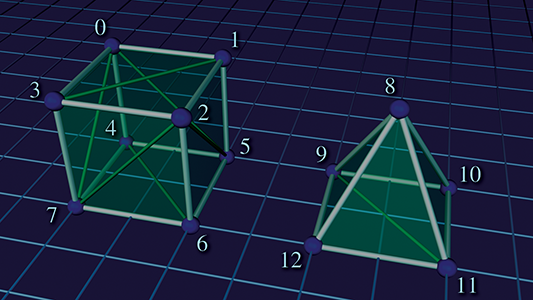

Figure 10.11 shows how a cube and a pyramid might be represented as a polygon mesh or a triangle mesh. Note that both objects are part of a single mesh with 13 vertices. The lighter, thicker wires show the outlines of polygons, and the thinner, dark green wires show one way to add edges to triangulate the polygon mesh.

Assuming the origin is on the “ground” directly between the two objects, the vertex coordinates might be as shown in Table 10.3.

| 0 | 4 | 8 | 12 | ||||

| 1 | 5 | 9 | |||||

| 2 | 6 | 10 | |||||

| 3 | 7 | 11 |

Table 10.4 shows the vertex indices that would form faces of this mesh, either as a polygon mesh or as a triangle mesh. Remember that the order of the vertices is significant; they are listed in clockwise order when viewed from the outside. You should study these figures until you are sure you understand them.

| Vertex indices | Vertex indices | |

| Description | (Polygon mesh) | (Triangle mesh) |

| Cube top | ||

| Cube front | ||

| Cube right | ||

| Cube left | ||

| Cube back | ||

| Cube bottom | ||

| Pyramid front | ||

| Pyramid left | ||

| Pyramid right | ||

| Pyramid back | ||

| Pyramid bottom |

The vertices must be listed in clockwise order around a face, but it doesn't matter which one is

considered the “first” vertex; they can be cycled without changing the logical structure of the

mesh. For example, the quad forming the cube top could equivalently have been given as

As indicated by the comments in Listing 10.5, additional data are almost always stored per vertex, such as texture coordinates, surface normals, basis vectors, colors, skinning data, and so on. Each of these is discussed in later sections in the context of the techniques that make use of the data. Additional data can also be stored at the triangle level, such as an index that tells which material to use for that face, or the plane equation (part of which is the surface normal—see Section 9.5) for the face. This is highly useful for editing purposes or in other tools that perform mesh manipulations in software. For real-time rendering, however, we seldom store data at the triangle level beyond the three vertex indices. In fact, the most common method is to not have a struct Triangle at all, and to represent the entire list of triangles simply as an array (e.g. unsigned short triList[] ), where the length of the array is the number of triangles times 3. Triangles with identical properties are grouped into batches so that an entire batch can be fed to the GPU in this optimal format. After we review many of the concepts that give rise to the need to store additional data per vertex, Section 10.10.2 looks at several more specific examples of how we might feed that data to the graphics API. By the way, as a general rule, things are a lot easier if you do not try to use the same mesh class for both rendering and editing. The requirements are very different, and a bulkier data structure with more flexibility is best for use in tools, importers, and the like.

Note that in an indexed triangle mesh, the edges are not stored explicitly, but rather the adjacency information contained in an indexed triangle list is stored implicitly: to locate shared edges between triangles, we must search the triangle list. Our original trivial “array of triangles” format in Listing 10.4 did not have any logical connectivity information (although we could have attempted to detect whether the vertices on an edge were identical by comparing the vertex positions or other properties). What's surprising is that the “extra” connectivity information contained in the indexed representation actually results in a reduction of memory usage in most cases, compared to the flat method. The reason for this is that the information stored at the vertex level, which is duplicated in the trivial flat format, is relatively large compared to a single integer index. (At a minimum, we must store a 3D vector position.) In meshes that arise in practice, a typical vertex has a valence of around 3–6, which means that the flat format duplicates quite a lot of data.

The simple indexed triangle mesh scheme is appropriate for many applications, including the very important one of rendering. However, some operations on triangle meshes require a more advanced data structure in order to be implemented more efficiently. The basic problem is that the adjacency between triangles is not expressed explicitly and must be extracted by searching the triangle list. Other representation techniques exist that make this information available in constant time. One idea is to maintain an edge list explicitly. Each edge is defined by listing the two vertices on the ends. We also maintain a list of triangles that share the edge. Then the triangles can be viewed as a list of three edges rather than a list of three vertices, so they are stored as three indices into the edge list rather than the vertex list. An extension of this idea is known as the winged-edge model [7], which also stores, for each vertex, a reference to one edge that uses the vertex. The edges and triangles may be traversed intelligently to quickly locate all edges and triangles that use the vertex.

10.4.2Surface Normals

Surface normals are used for several different purposes in graphics; for example, to compute proper lighting (Section 10.6), and for backface culling (Section 10.10.5). In general, a surface normal is a unit10 vector that is perpendicular to a surface. We might be interested in the normal of a given face, in which case the surface of interest is the plane that contains the face. The surface normals for polygons can be computed easily by using the techniques from Section 9.5.

Vertex-level normals are a bit trickier. First, it should be noted that, strictly speaking, there is not a true surface normal at a vertex (or an edge for that matter), since these locations mark discontinuities in the surface of the polygon mesh. Rather, for rendering purposes, we typically interpret a polygon mesh as an approximation to some smooth surface. So we don't want a normal to the piecewise linear surface defined by the polygon mesh; rather, we want (an approximation of) the surface normal of the smooth surface.

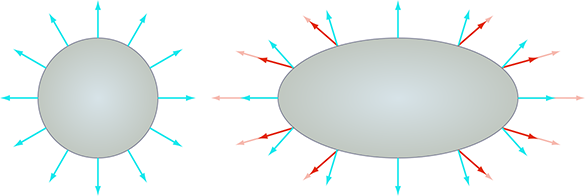

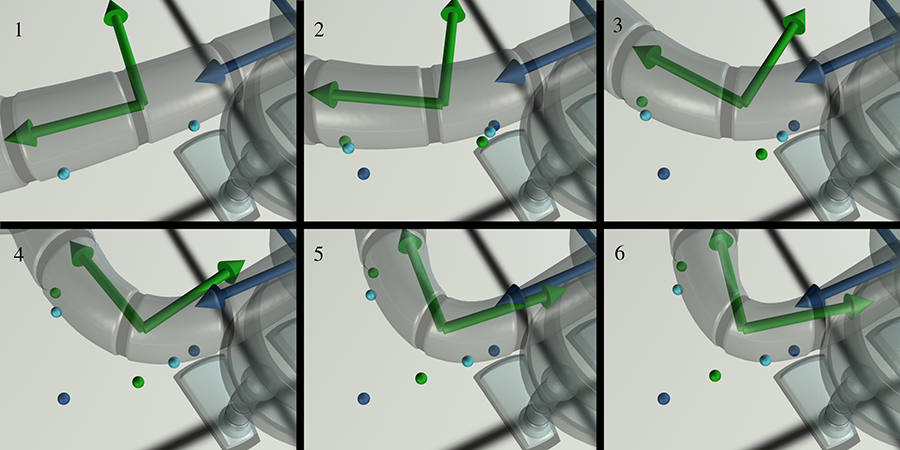

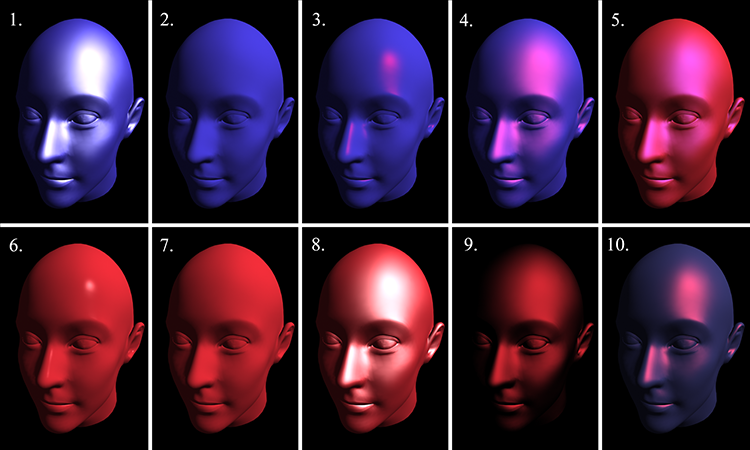

The primary purpose of vertex normals is lighting. Practically every lighting model takes a surface normal at the spot being lit as an input. Indeed, the surface normal is part of the rendering equation itself (in the Lambert factor), so it is always an input, even if the BRDF does not depend on it. We have normals available only at the vertices, but yet we need to compute lighting values over the entire surface. What to do? If hardware resources permit (as they usually do nowadays), then we can approximate the normal of the continuous surface corresponding to any point on a given face by interpolating vertex normals and renormalizing the result. This technique is illustrated in Figure 10.12, which shows a cross section of a cylinder (black circle) that is being approximated by a hexagonal prism (blue outline). Black normals at the vertices are the true surface normals, whereas the interior normals are being approximated through interpolation. (The actual normals used would be the result of stretching these out to unit length.)

Once we have a normal at a given point, we can perform the full lighting equation per pixel. This is known as per-pixel shading.11 An alternative strategy to per-pixel shading, known as Gouraud12 shading [9], is to perform lighting calculations only at the vertex level, and then interpolate the results themselves, rather than the normal, across the face. This requires less computation, and is still done on some systems, such as the Nintendo Wii.

Figure 10.13 shows per-pixel lighting of cylinders with a different number of sides. Although the illusion breaks down on the ends of the cylinder, where the silhouette edge gives away the low-poly nature of the geometry, this method of approximating a smooth surface can indeed make even a very low-resolution mesh look “smooth.” Cover up the ends of the cylinder, and even the 5-sided cylinder is remarkably convincing.

Now that we understand how normals are interpolated in order to approximately reconstruct a curved surface, let's talk about how to obtain vertex normals. This information may not be readily available, depending on how the triangle mesh was generated. If the mesh is generated procedurally, for example, from a parametric curved surface, then the vertex normals can be supplied at that time. Or you may simply be handed the vertex normals from the modeling package as part of the mesh. However, sometimes the surface normals are not provided, and we must approximate them by interpreting the only information available to us: the vertex positions and the triangles. One trick that works is to average the normals of the adjacent triangles, and then renormalize the result. This classic technique is demonstrated in Listing 10.6.

struct Vertex {

Vector3 pos;

Vector3 normal;

};

struct Triangle {

int vertexIndex[3];

Vector3 normal;

};

struct TriangleMesh {

int vertexCount;

Vertex *vertexList;

int triangleCount;

Triangle *triangleList;

void computeVertexNormals() {

// First clear out the vertex normals

for (int i = 0 ; i < vertexCount ; ++i) {

vertexList[i].normal.zero();

}

// Now add in the face normals into the

// normals of the adjacent vertices

for (int i = 0 ; i < triangleCount ; ++i) {

// Get shortcut

Triangle &tri = triangleList[i];

// Compute triangle normal.

Vector3 v0 = vertexList[tri.vertexIndex[0]].pos;

Vector3 v1 = vertexList[tri.vertexIndex[1]].pos;

Vector3 v2 = vertexList[tri.vertexIndex[2]].pos;

tri.normal = cross(v1-v0, v2-v1);

tri.normal.normalize();

// Sum it into the adjacent vertices

for (int j = 0 ; j < 3 ; ++j) {

vertexList[tri.vertexIndex[j]].normal += tri.normal;

}

}

// Finally, average and normalize the results.

// Note that this can blow up if a vertex is isolated

// (not used by any triangles), and in some other cases.

for (int i = 0 ; i < vertexCount ; ++i) {

vertexList[i].normal.normalize();

}

}

};

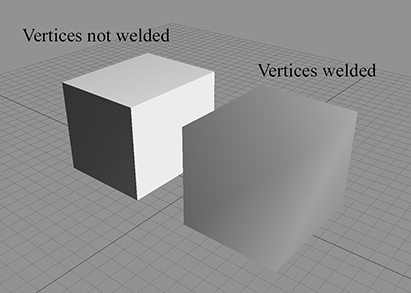

Averaging face normals to compute vertex normals is a tried-and-true technique that works well in most cases. However, there are a few things to watch out for. The first is that sometimes the mesh is supposed to have a discontinuity, and if we're not careful, this discontinuity will get “smoothed out.” Take the very simple example of a box. There should be a sharp lighting discontinuity at its edges. However, if we use vertex normals computed from the average of the surface normals, then there is no lighting discontinuity, as shown in Figure 10.14.

|

|

||||||||||||||||||||||||||||||||||||||||||||||

The basic problem is that the surface discontinuity at the box edges cannot be properly represented because there is only one normal stored per vertex. The solution to this problem is to “detach” the faces; in other words, duplicate the vertices along the edge where there is a true geometric discontinuity, creating a topological discontinuity to prevent the vertex normals from being averaged. After doing so, the faces are no longer logically connected, but this seam in the topology of the mesh doesn't cause a problem for many important tasks, such as rendering and raytracing. Table 10.5 shows a smoothed box mesh with eight vertices. Compare that mesh to the one in Table 10.6, in which the faces have been detached, resulting in 24 vertices.

|

|

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

An extreme version of this situation occurs when two faces are placed back-to-back. Such infinitely thin double-sided geometry can arise with foliage, cloth, billboards, and the like. In this case, since the normals are exactly opposite, averaging them produces the zero vector, which cannot be normalized. The simplest solution is to detach the faces so that the vertex normals will not average together. Or if the front and back sides are mirror images, the two “single-sided” polygons can be replaced by one “double-sided” one. This requires special treatment during rendering to disable backface culling (Section 10.10.5) and intelligently dealing with the normal in the lighting equation.

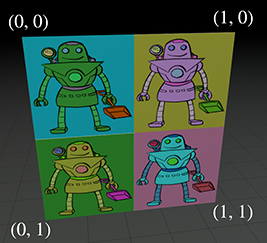

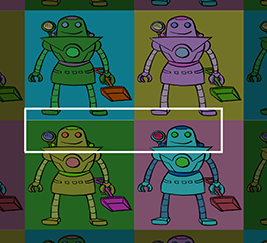

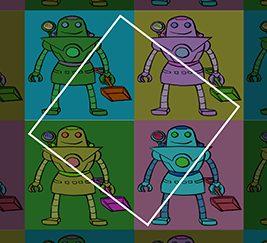

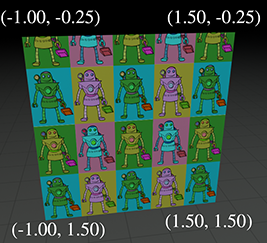

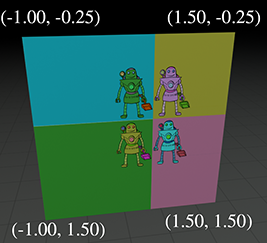

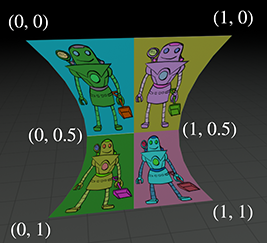

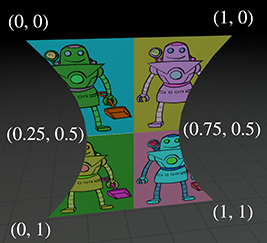

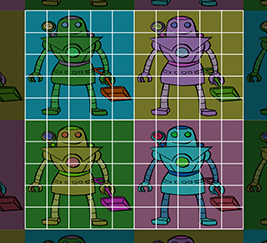

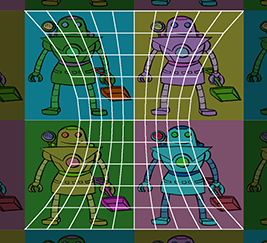

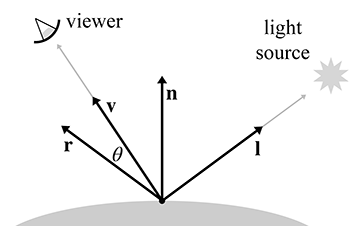

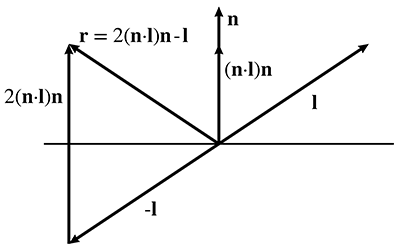

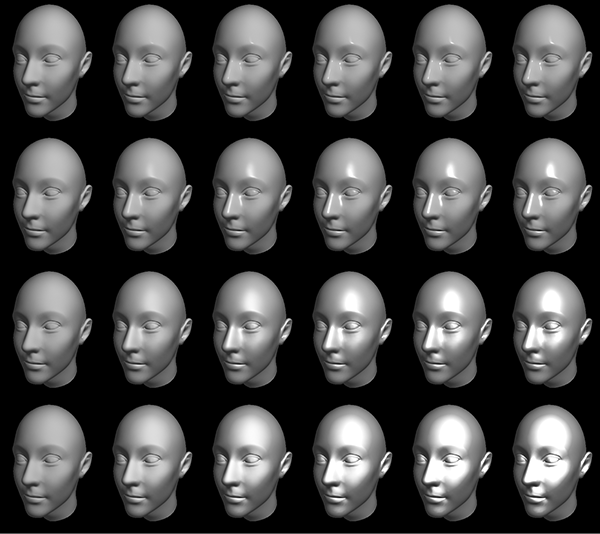

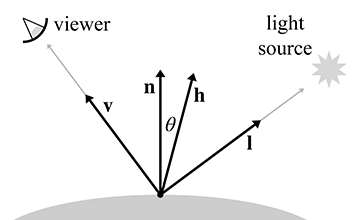

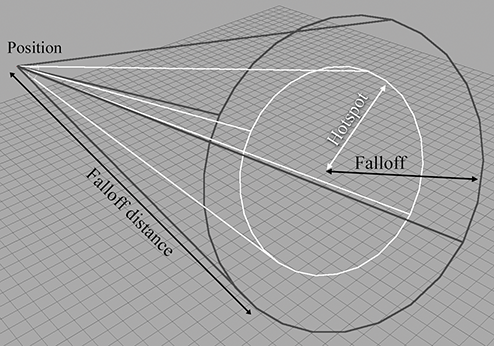

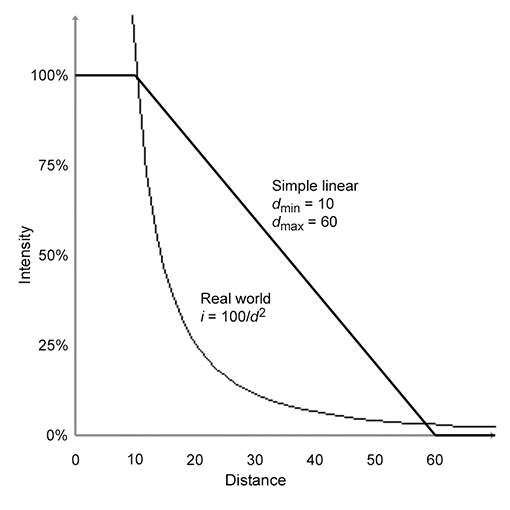

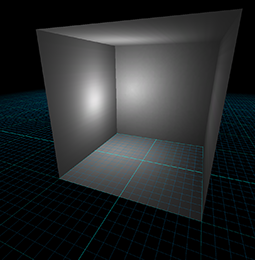

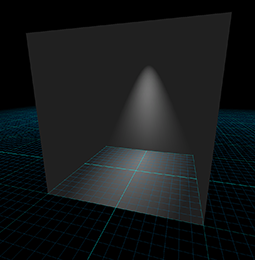

A more subtle problem is that the averaging is biased towards large numbers of triangles with the same normal. For example, consider the vertex at index 1 in Figure 10.11. This vertex is adjacent to two triangles on the top of the cube, but only one triangle on the right side and one triangle on the back side. The vertex normal computed by averaging the triangle normals is biased because the top face normal essentially gets twice as many “votes” as each of the side face normals. But this topology is the result of an arbitrary decision as to where to draw the edges to triangulate the faces of the cube. For example, if we were to triangulate the top face by drawing an edge between vertices 0 and 2 (this is known as “turning” the edge), all of the normals on the top face wouldchange.